Daniel Fine

Theatre Arts | Dance | Public Digital Arts

Tenure Dossier

Media Clown

Digital Media and Live Performance

Role: Co-Principal Investigator, Co-Creator, Co-Producer, Director

Peer-Reviewed Outcomes/Dissemination:

June 2021

Virtual/online workshop performance of new research. Supported by grant funding from the Provost Investment in Strategic Priorities Award at UI and Epic Mega Grants. ABW Media Theatre, University of Iowa.

June 2019

International Premiere Performance: Prague Quadrennial, Czech Republic, the largest international exhibition and festival event dedicated to scenography, performance design and theatre architecture. Media Clown was one of forty-three performances selected from more than 130 applications worldwide by a jury led by international designer Patrick Du Wors.

May 2019

Invited Performances: Four-week residency at Backstage Academy, West Yorkshire, UK.

June 2019

Invited guest lecture at the School of Art, Design and Performance at the University of Portsmouth

October 2019

Invited presenter for public lecture series at The Obermann Center for Advanced Studies.

October 2020

Peer-Reviewed Research Team Panel Presentation: Live Design International (canceled due to the pandemic).

October 2019

Peer-Reviewed Acceptance for a Poster Presentation at the 2019 Movement and Computing Conference (declined).

The total amount of grants received to date is $77,050.00.

Major grants of note include:

- $25,000.00 external grant from Epic Mega Grants, a highly competitive grant to “support game developers, enterprise professionals, media and entertainment creators, students, educators, and tool developers doing amazing things with Unreal Engine or enhancing open-source capabilities for the 3D graphics community”

- $18,000.00 development grant for Interdisciplinary Research from the Obermann Center at UI

- $2,000.00 Provost Investment in Strategic Priorities Award at UI.

A full breakdown of total grants awarded can be found here.

Photo Gallery:

First draft of promotion photos for PQ2019. Photo by Courtney Gaston.

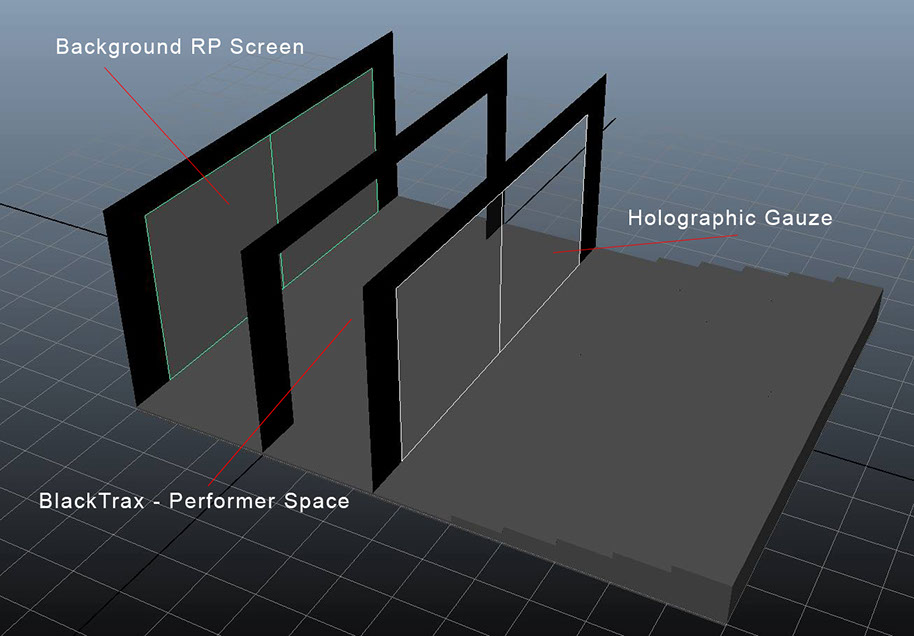

3D previsualization in Disguise media server for PQ2019.

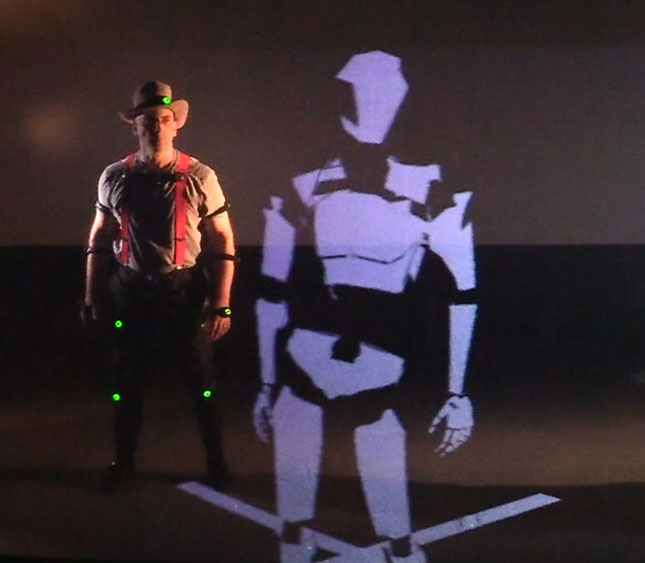

Early motion capture and projection tests.

Storyboard created by Daniel Fine for the particle sequence. PQ2019.

Setting up the motion capture suit at Backstage Academy. Photo by Daniel Fine

The Iowa team at PQ2019. PIs and graduate students.

The PIs in front of a poster advertising their upcoming talk at Portsmouth University, UK.

Backstage Academy Performance. Photo by Daniel Fine

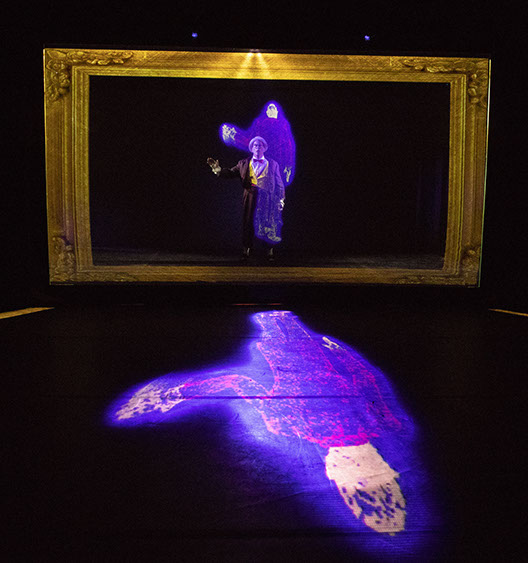

PQ Performance. A modern twist on the classic Duck Soup routine. Photo by Dana Keeton

Stuck inside an iPad. PQ19 Performance. Photo by Dana Keeton

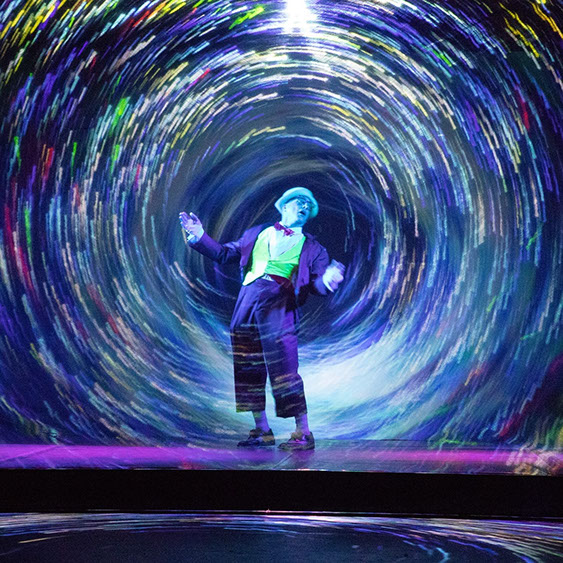

Particles swirl and moving lights auto-match color/intensity of projections. PQ19 Performance. Photo by Dana Keeton

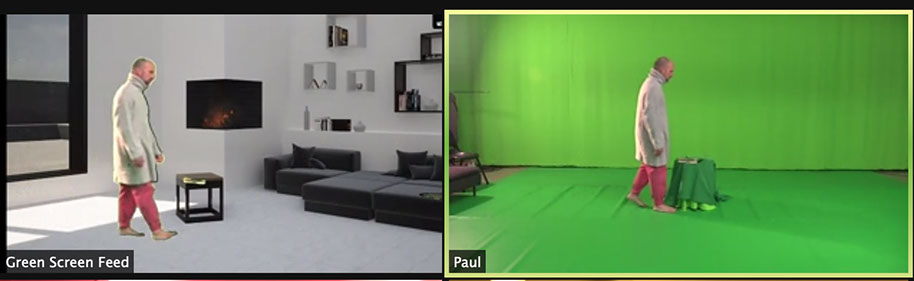

Early tests for virtual performance on Zoom. Background created in Unreal and ported to TouchDesigner, where it is composited with the live video stream.

Early tests with compositing using Isadora.

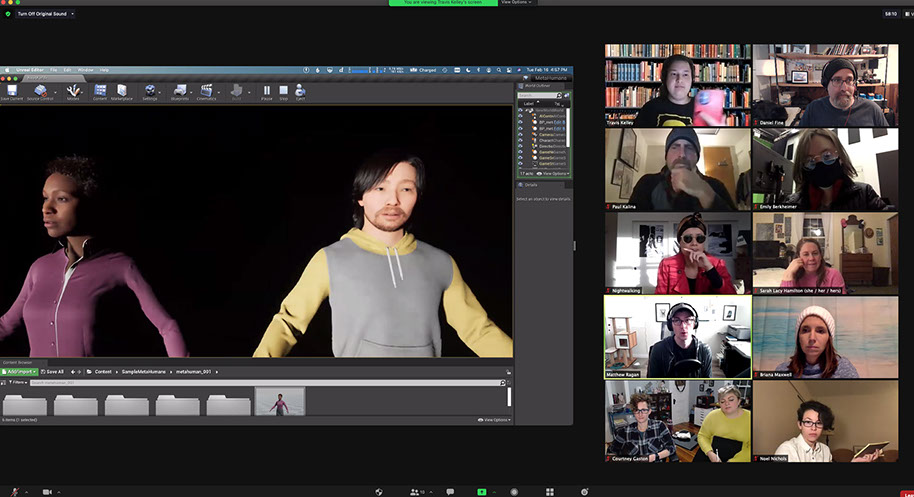

Testing Unreal's MetaHuman as an option for MoCap avatar control for the character of de.Z.

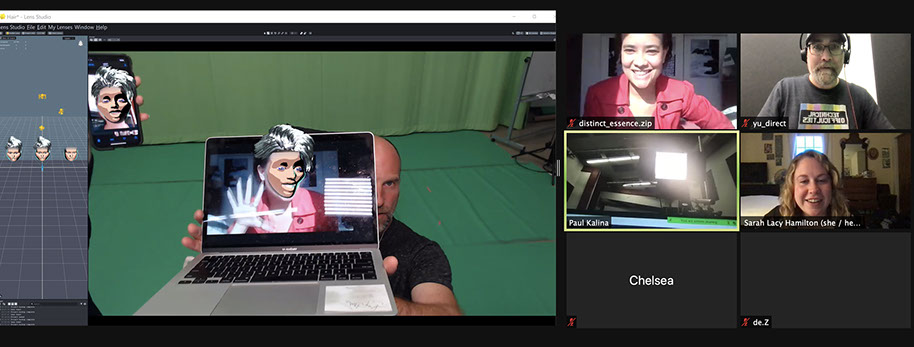

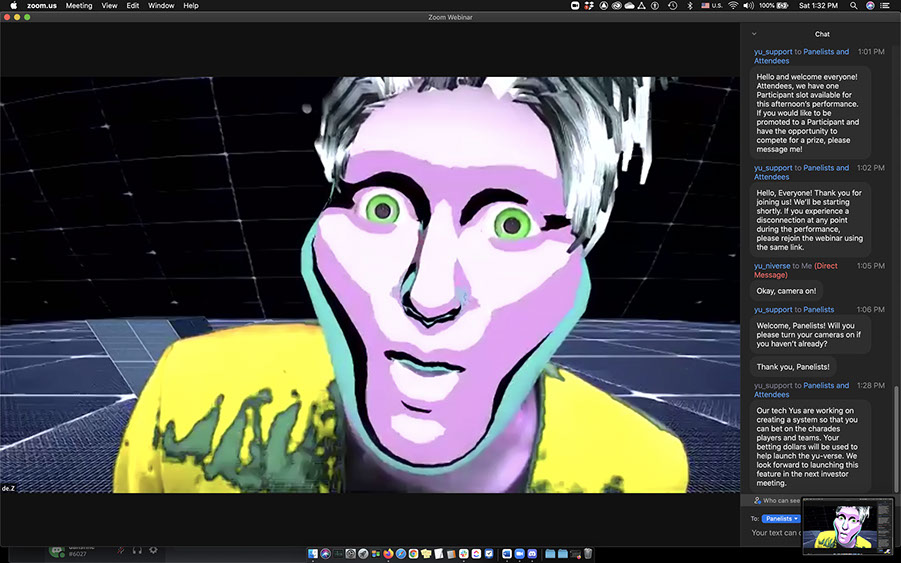

Early test using SnapCam's Lens Studio as an AR option for MoCap control of the character de.Z.

Early test using SnapCam's Lens Studio as an AR option for MoCap control of the character de.Z. Clowning with the facial recognition.

Test using SnapCam's Lens Studio as an AR option for MoCap control of live audience members' video feeds.

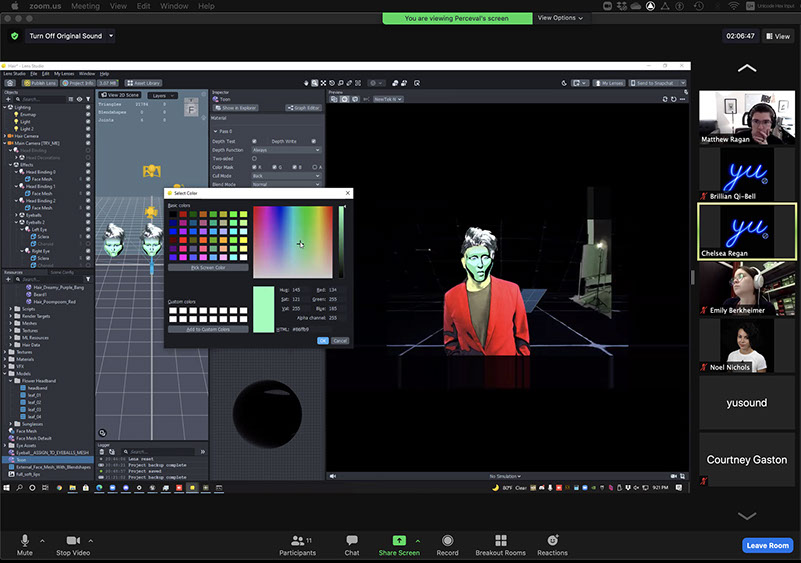

Tweaking parameters on SnapCam's Lens Studio for AR character de.Z.

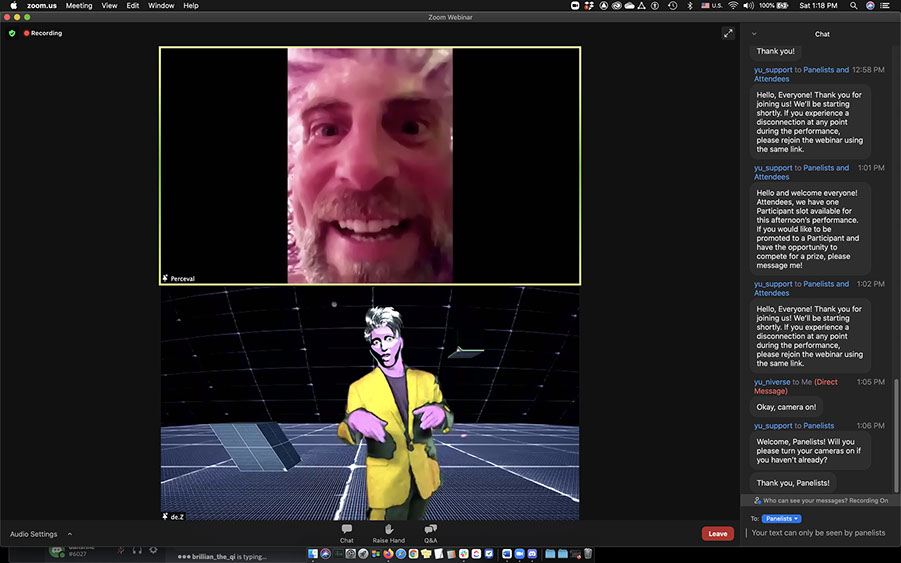

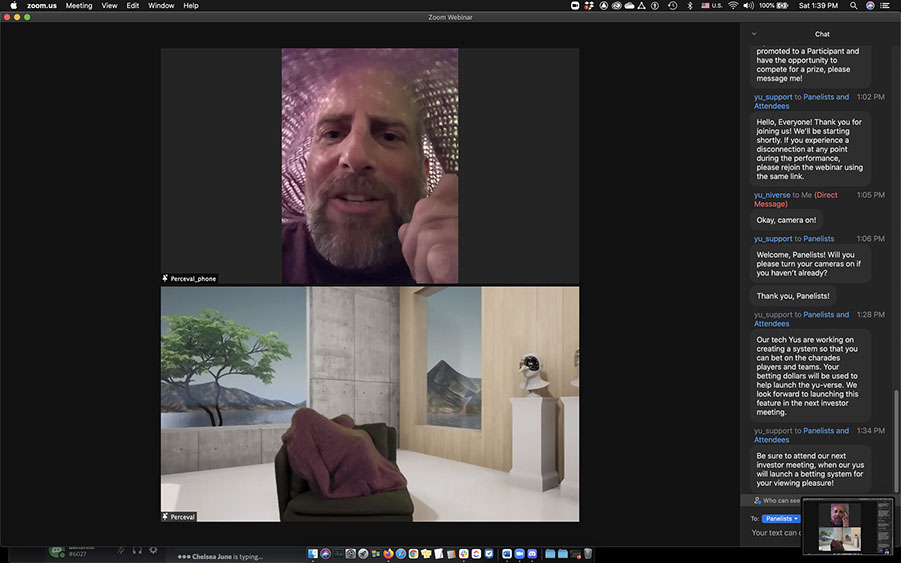

June 2020 online/virtual workshop. Prerecording of Paul playing the character of Percy (top) and Paul (live) playing the character of de.Z (bottom).

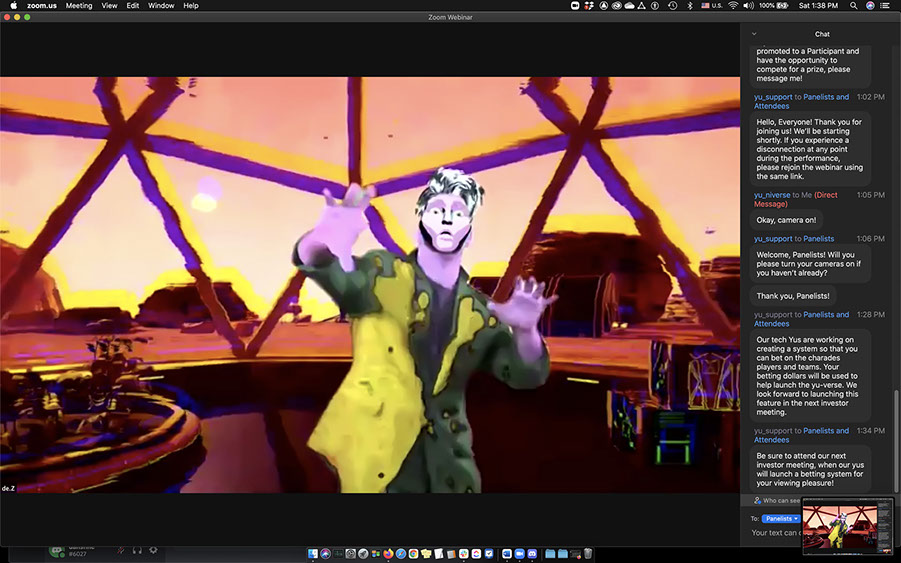

de.Z in a different background. Background created in Unreal and ported to TouchDesigner, where it is composited with the live video stream. June 2021 virtual/online workshop performance.

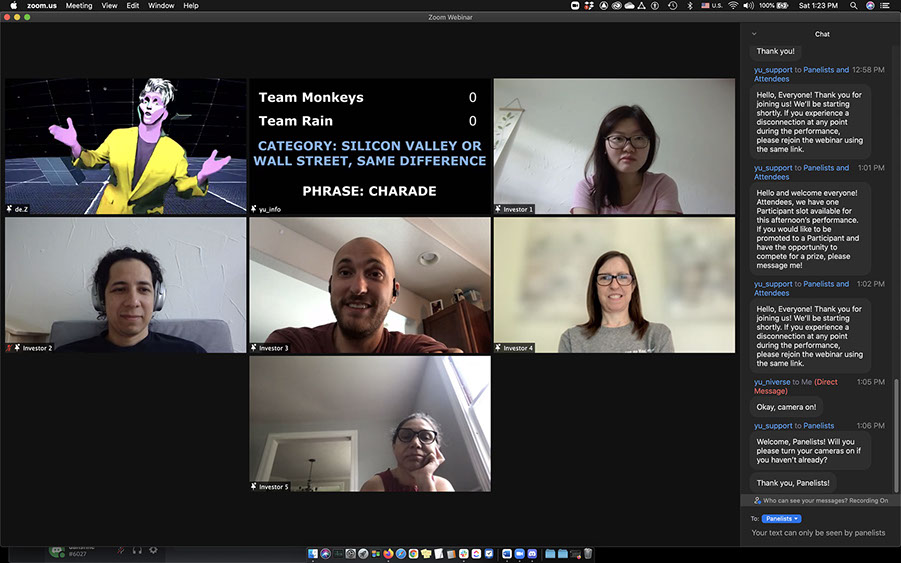

de.Z playing charades with audience members in Zoom. June 2021 virtual/online workshop performance.

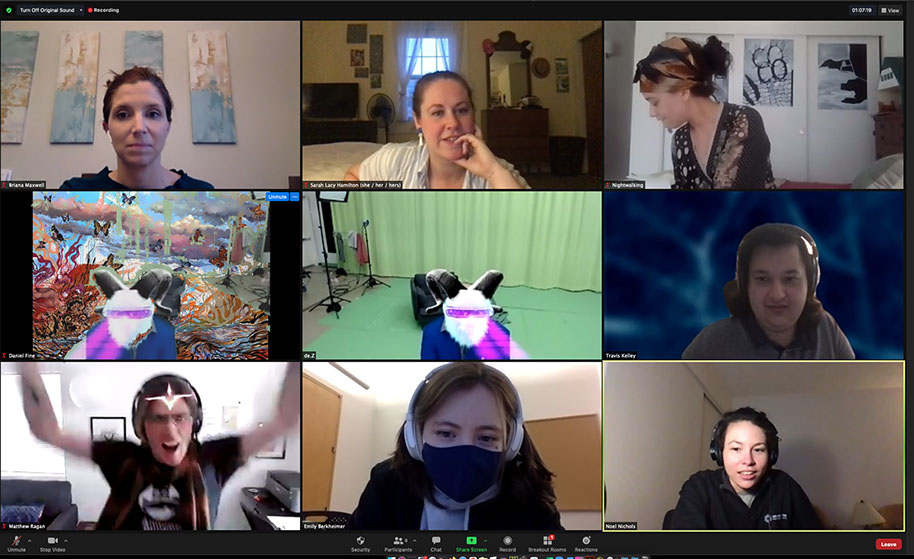

Audience members playing charades. June 2021 virtual/online workshop performance.

June 2020 online/virtual workshop performance.

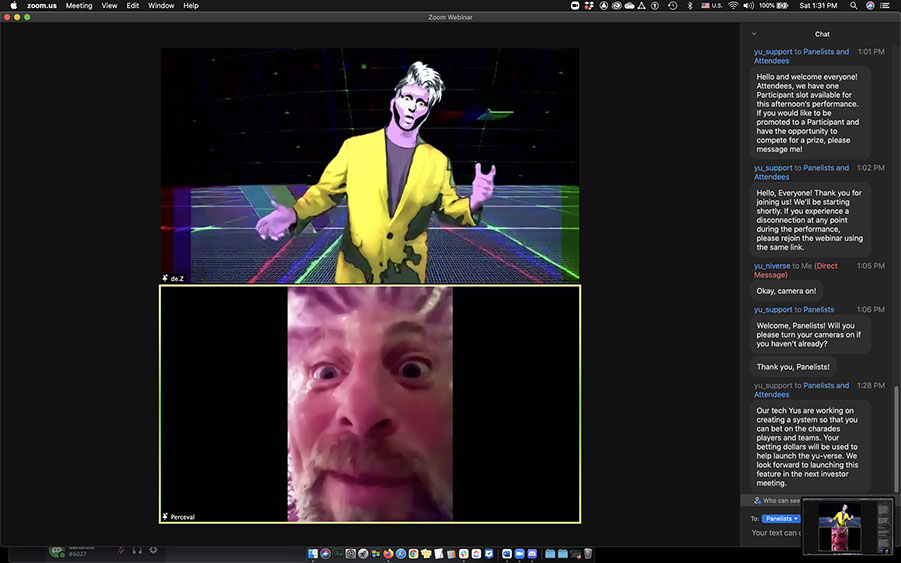

Prerecording of Paul playing the character of Percy (bottom) and Paul (live) playing the character of de.Z (top). Real-time RGB effect applied to video via custom software controllable by an operator on a website. June 2021 online/virtual workshop performance.

Close-up of de.Z with AR filter. June 2021 online/virtual workshop performance.

Real-time decay effect applied to video (top left) via custom software controllable by an operator on a website. June 2021 online/virtual workshop performance.

Real-time RGB effect applied to video via custom software controllable by an operator on a website. June 2021 online/virtual workshop performance.

Paul playing the character of Percy. Live video feed from inside the sweater (top), captured by a cell phone dialed into the Zoom call, and the main camera view (bottom). June 2021 online/virtual workshop performance.

Percy talking directly to the audience. Background: his projected world. June 2021 online/virtual workshop performance.

Percy talking directly to the audience. Background: his real world (still a real-time background in Unreal). June 2021 online/virtual workshop performance.


Videos:

Prague Quadrennial 2019 Performance:

Virtual Performance Workshop June 2021:

Backstage Tour June 2021 Virtual Performance:

Backstage/Final Composite June 2021 Virtual Performance:

Key Creative Team:

Co-PI, Co-Creator, Co-Producer, Performer: Paul Kalina

Co-PI, Co-Creator, Director of Technology: Matthew Ragan

Co-Creator, Lead Writer: Leigh M. Marshall

Co-Creator, Co-Head of Art Department, Costume & Character Design: Chelsea June

Co-Creator, Co-Head of Art Department, Physical & Virtual Set & Lighting Design: Courtney Gaston

Co-Creator, Associate Director, Director of Marketing & Alternate Storytelling: Sarah Lacy Hamilton

Head of Audio & Sound Design & Integration: Noel Nichols

Associate Director of Technology, System Integration: Travis Kelley

Assistant Director of Technology & Lens Studio Character Development: Emily Berkheimer

Stage Manager: Brillian Qi-Bell

About:

Note: writing in this section is a combination of text edited and compiled by Daniel Fine and written by:

- Daniel Fine, for this portfolio website

- Co-PI Paul Kalina and me, for various grants and promotional materials

- Co-PI Paul Kalina, Matthew Ragan and me, for the Unreal grant

- Lead-Writer Leigh Marshall, for promotional materials

I am the Co-PI with Associate Professor Paul Kalina and serve as Co-Creator, Artistic Director, Director, and Designer. Media Clown (think Keaton, not Bozo) is an ongoing, devised research project that includes live, digital, and hybrid in-person performances. This interdisciplinary research team has members from theatre, art, engineering, and computer science, with collaborators from industry working with UI faculty, staff, current students and alumni. The purpose of the project is to integrate the newest digital technologies, specifically motion capture, Augmented Reality and real-time systems, with traditional/theatrical clown techniques. How does the analog clown remain relevant in the digital age and interact with audiences using digital technologies? What is the nature of the codependency between live, physical performance and live digital media systems? How can clown blur the lines between real and imaginary spaces while holding up a mirror to our advanced technological society?

The clown’s history and importance to community is diverse and spans the ages - from the shaman clowns (Heyokas) of the Hopi and Lakota tribes, to the court jester, to the clowns of Vaudeville (Abbott and Costello, The Marx Brothers) to the current clowns of Cirque du Soleil and those on stage like Bill Irwin and David Shiner (Fool Moon, Old Hats). The clown exists to join us to our shared humanity. Emerging and digital technologies are changing how we connect with our communities, and in today’s rapidly changing world of entertainment, the centuries-old artform of the analog clown is losing relevance to a modern audience. Yet the clown has always evolved with the times, from the streets to the circus ring to the theatre and eventually film (Buster Keaton and Charlie Chaplin) and television (Lucille Ball and Carol Burnett). In order for “the clown [to remain] a recognizable figure who plays transformative and healing roles in many diverse cultures” (Proctor), there is a need for the clown to evolve yet again for a digital-native generation.

One artistic strength of the clown lies in their ability to respond to an audience in real time. The performer must read the audience and shift on a dime to tailor the performance on any particular night in order to create a communal experience. For this reason, live clown shows have avoided immersing their performances in technology, because it was not facile enough to respond to audience reactions and make each performance unique. Media Clown tackles this problem by working with motion capture and real-time systems to eliminate this obstacle and expand the traditional performance space beyond the stage to include audience space, shared virtual spaces, audience control of digital avatars and other special digital media effects. According to contemporary performance scholar Adrian Heathfield, performance has shifted aesthetically from “the optic to the haptic, from the distant to the immersive, from the static relation to the interactive.” Media Clown explores the underlying ideologies at the heart of this paradigm shift by using motion capture, Augmented Reality, Virtual Reality, and new integrated real-time digital media systems to create interactive, hybrid digital/physical spaces, which allow audiences to become directly engaged and physically involved in the story, thus connecting them with the clown and each other through technology.

The first stage of the research began in 2017 as a yearlong series of creative conversations between clown and Associate Professor Paul Kalina and me on how to integrate technology into live clown shows. The project then moved to concrete research and practical development in 2018 with the award of an Interdisciplinary Research Grant ($18,000) from the University of Iowa’s Obermann Center for Advanced Studies. After the 2018 summer intensive of developmental research, we put together a research team of three Theatre Arts graduate students and partnered with Leader/Lecturer Shannon Harvey and his students of Live Visual Design and Production at Backstage Academy (South Kirkby, UK). The research team worked virtually with students in the UK for a year to develop the integration of the technical system, using motion capture to drive the performance. We spent four weeks in an invited residency at Backstage Academy in May 2019 putting together the first version of the show. The entire cross-university research team premiered the results of the performance research and development as an official entry in the PQ: Festival, part of the 2019 Prague Quadrennial (PQ), the largest international exhibition and festival event dedicated to scenography, performance design and theatre architecture.

Fine and Kalina raised $50,050.00 in cash and $500,000.00 in in-kind short-term equipment rentals for this performance. The sharing of the creative research at the festival garnered positive feedback from an international audience of industry leaders. As a result of the performance at PQ, Media Clown received open invitations from Ohio State, CalArts and Portsmouth College UK to present the final work, confirming the team's proof of concept and moving the team closer to its goal of a fully formed touring performance.

The story/narrative of this phase of the research was driven by two major elements. The first was the special skills of our clown, Paul Kalina. Since so much of the research process was about integrating various technologies and systems into live performance, we wanted to stay within the skillset that Paul was already bringing to the project as a clown. We started small and took a more scientific approach, incorporating and exploring the possibilities of different technologies one at a time and creating short vignettes around each technology. The second driving force of the early research phase was to keep the storyline simple and straightforward, so that the majority of our efforts were focused on the co-performance between Paul and the technology rather than on story and world building.

Paul had multiple types of technology embedded into his costume:

- BlackTrax beacons: This camera-based motion capture system allowed us to explore two things:

    - Having moving lights act as intelligent follow spots, lighting Paul wherever he was onstage.

    - Providing Paul's absolute position relative to a 0,0,0 origin in XYZ 3D space, something the inertial skeletal motion capture system could not provide in real time. Knowing where Paul is relative to the center-center-center origin of the physical space, and how that translates into 3D space, is the only way to accurately place Paul inside the digital world.

- Perception Neuron inertial skeletal tracking system: This wireless, markerless, Wi-Fi-based tracking system allowed Paul to control a digital avatar, the digital clown.

- A custom-made, wireless DMX light-up jacket that, at the climax of the story, connected the analog clown to the digital world.

- A wireless receiver that let his ukulele plug into the sound system.
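The two BlackTrax tasks above come down to simple geometry. The sketch below is a hypothetical illustration, not the team's implementation. It assumes a y-up stage coordinate system in meters with its origin at center-center-center, an inertial skeleton reported as joint offsets from the hips, and a moving light whose aim can be expressed as pan and tilt in degrees. The names `to_stage_space` and `aim_fixture` are invented for this example.

```python
import math
from typing import Dict, Tuple

# (x, y, z) in meters; y is up and (0, 0, 0) is center-center-center (assumed).
Vec3 = Tuple[float, float, float]


def to_stage_space(root_position: Vec3, joint_offsets: Dict[str, Vec3]) -> Dict[str, Vec3]:
    """Anchor a root-relative skeleton at an absolute, optically tracked position.

    root_position: where the camera-based tracker says the performer's root (hips) is.
    joint_offsets: each joint's offset from the root, as an inertial suit might report it.
    """
    rx, ry, rz = root_position
    return {
        name: (rx + ox, ry + oy, rz + oz)
        for name, (ox, oy, oz) in joint_offsets.items()
    }


def aim_fixture(fixture: Vec3, target: Vec3) -> Tuple[float, float]:
    """Return (pan, tilt) in degrees that point a moving light at a target.

    Pan rotates around the vertical axis, measured from +z toward +x.
    Tilt is 0 when pointing straight down and 90 at the horizon.
    """
    dx = target[0] - fixture[0]
    dy = target[1] - fixture[1]
    dz = target[2] - fixture[2]
    pan = math.degrees(math.atan2(dx, dz))
    tilt = math.degrees(math.atan2(math.hypot(dx, dz), -dy))
    return pan, tilt


if __name__ == "__main__":
    # Hypothetical frame: hips tracked 1 m stage left of center and 2 m downstage.
    skeleton = {"hips": (0.0, 0.0, 0.0), "head": (0.0, 0.7, 0.0)}
    placed = to_stage_space((1.0, 1.0, 2.0), skeleton)
    print(placed)
    # Follow spot hung 6 m above the origin, aimed at the performer's hips.
    print(aim_fixture((0.0, 6.0, 0.0), placed["hips"]))
```

Converting these angles into a particular fixture's DMX pan/tilt values depends on that fixture's range and mounting, which this sketch leaves out.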

Paul developed a rather traditional style of clown: one who doesn’t talk and constantly finds himself getting into trouble, with a multitude of opportunities for physical comedy. We developed a rather straightforward story with a score of scenes (for further details, please see the story outline/score here). An analog ukulele musician shows up at the venue to perform a set, but everything goes wrong: he gets into a snag/dance with his uncooperative music stand, and finally his sheet music explodes, leaving him with no way to finish his concert. A stagehand brings him an iPad with sheet music. He doesn’t understand the digital world and accidentally gets sucked into a giant version of the iPad. Two distinct playing spaces were created: one downstage, where the analog clown performed, and one upstage, where the oversized theatrical set of the iPad was located. The iPad set piece was faced to look like the frame of an iPad. At the rear was a rear-projection screen and, in front, a hologauze screen (a holographic effects screen designed to reflect projections so that they appear to float magically in the air). This combination of rear and front projection, with the performer in the middle, makes it appear that the performer is immersed inside the digital world of an iPad. Surrounded by this immersive digital world, the analog clown interfaced with a digital version of himself inside the giant iPad.

For decades, artists have been updating a classic Marx Brothers routine from the movie Duck Soup, which was itself based on an old vaudeville routine. The PQ performance built upon this lineage with a novel, modern approach to the classic mirror routine. At one point in the show, an avatar that resembles the clown appears on the hologauze. Through motion capture, the movements of the avatar mirror the movements of the clown, which is quite disconcerting for the clown and leads to a great deal of physical comedy and a twist at the end that can only be achieved through motion capture technology. Audience members unfamiliar with the Marx Brothers’ version found it surprising and hilarious, and those familiar with the routine enjoyed the new technological version with the added knowledge of its origins.
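One way to picture the mirror routine is as a reflection of the tracked pose across the plane of the hologauze. The sketch below is a hypothetical illustration, not the show's actual setup. It assumes joint positions in stage coordinates, a hologauze lying in the vertical plane `z = plane_z`, and joint names prefixed with `left_`/`right_`. Because a mirror image is chiral, the reflected left-hand position has to drive the avatar's right hand when the avatar is an ordinary, non-mirrored rig.

```python
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) stage coordinates (assumed convention)


def swap_side(name: str) -> str:
    """Relabel a hypothetical 'left_*' joint as 'right_*' and vice versa."""
    if name.startswith("left_"):
        return "right_" + name[len("left_"):]
    if name.startswith("right_"):
        return "left_" + name[len("right_"):]
    return name


def mirror_pose(joints: Dict[str, Vec3], plane_z: float) -> Dict[str, Vec3]:
    """Reflect each joint across the plane z = plane_z and swap left/right labels.

    A point at distance d in front of the plane lands at distance d behind it,
    so z becomes 2 * plane_z - z while x and y are unchanged.
    """
    return {
        swap_side(name): (x, y, 2.0 * plane_z - z)
        for name, (x, y, z) in joints.items()
    }


if __name__ == "__main__":
    # The clown raises his left hand 1.8 m in front of a hologauze at z = 3.0.
    pose = {"head": (0.0, 1.7, 1.0), "left_hand": (-0.4, 1.9, 1.2)}
    print(mirror_pose(pose, plane_z=3.0))
```

Breaking the reflection for a gag (for example, delaying or freezing one joint) would then be a matter of editing the mirrored pose before it is sent to the avatar.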

One of the key technological aspects of Media Clown is the use of a motion capture system, similar to the technology used in films such as Avatar to capture actors' movements and drive digital characters. For the UK and PQ performance research, Media Clown used an inertial motion capture suit that utilizes the Earth’s magnetic field to map the performer's body and create the digital effects on stage in real time. The motion capture data was the heart of a workflow that allowed multiple systems (video, lighting, sound) to use the location data of the performer (and audience) to create real-time digital effects. Unfortunately, the team discovered that the inertial motion capture system was very unstable near metal or concrete, because these materials disturbed the magnetic readings of the sensors, distorting the avatar’s image. The team found a temporary workaround, but a long-term, stable motion capture solution would need to be found.

Upon returning from Prague, Paul and I began to research other types of motion capture systems. This led Paul to contact the head of Sony Studio’s motion capture lab, Charles Ghislandi. After discussing the project, Ghislandi recommended a camera-based system for skeletal tracking because of its stability, and consulted with the team on the best motion capture solution.

These conversations made it clear that the team was missing a collaborator who worked in advanced real-time system workflows and high-end motion capture. Fine and Kalina approached long-time collaborator Matthew Ragan, former Director of Software at The Madison Square Garden Company. Matthew is a designer and educator whose work explores the challenges and opportunities of large immersive systems. At the forefront of his research is the development of pipelines for real-time content creation and the intersection of interactive digital media and live performance. Matthew agreed to join the project as Motion Capture, Real-Time Content and Software Director.

The current round of research is funded by a $25,000.00 external grant from Epic Games and is a collaboration with industry professional Matthew Ragan and sound designer Noel Nichols, the original three graduate students who have since graduated, three additional UI recent alumni (one graduate and two undergraduates) and one current undergraduate.

In terms of story and character, we felt that it was time to create a more advanced, in-depth story world for the next phase of research. We invited UI alum Leigh Marshall to join the research team as lead writer. Throughout history, the clown has often mocked and reflected those in power, holding up a mirror to how the powerful in our society wield their control. We were curious about who the powerful in our society are and how the clown might shed new light on how that power affects us. In keeping with our research agenda of integrating technology into clown and live performance, our answer to this question was the technology gurus who build billion-dollar tech companies. The new clown protagonist is modelled after the likes of Elon Musk, Mark Zuckerberg, Adam Neumann, Jensen Huang, Jeff Bezos, and Elizabeth Holmes, to name a few.

We worked with Leigh and the team for a year to develop new characters and a new storyline. Our plan was to embed technology into the meaning-making of the show. The new clown character, Perceval, an eccentric tech guru, has invented the biggest thing since sliced bread: the yu (pronounced you), a humanoid holographic avatar that can physically inhabit the three-dimensional world. We developed an outline for a five-act structure for a live, in-person performance. See here for a timeline of the story world and here for an outline of the five-act structure.

PERCEVAL is an intensely introverted entrepreneur with ADHD, a raw idealist who doesn’t protect himself or his ideas with a fortress-like social facade (the drawbridge is always down, so to speak). His physical and virtual space is curated to suit his own particular brain chemistry - he accumulates things that comfort and interest him, surrounds himself with clocks/timers, archaic cell phones - his mind goes mile-a-minute with big ideas but when he’s in public (or has an audience) he gets like a deer in headlights. His shyness, however, comes with a massive ego, so he tries to cultivate an air of mystery around his image in order to comfortably satisfy his desire to be a public figure - this also allows him to obscure information he wants no one to ever know, like his humble background (he comes from someplace like Peoria or Detroit).

de.Z was created by Perceval to be the Ideal Perceval: suave, articulate, a popular extravert. He’s the embodiment of a sex jam with a goldfish brain. The Hugh Hefner of Big Tech. Highly developed but still a bit glitchy.

A key component of clowning, and of how Paul works, is improvisation and audience interaction. In keeping with the liveness and improvisation of clowning and live performance, we explored various methods of generating dialogue/script through Machine Learning. We collected several databases, such as tech start-up advertising campaigns, tech guru speeches/talks/product launches, and technology product disclaimers, and fed those databases into a machine learning AI (Artificial Intelligence) to create different models. We then gave the models different prompts, which produced different results. The results became the text spoken by de.Z. By creating a high-end chatbot through Machine Learning that is able to respond to our live performer and audience in real time, we maintained the improvisational element so important to clown and live performance work.
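The corpus-to-model-to-prompt loop described above can be illustrated with a far simpler technique than the models the team used. The sketch below swaps in a word-level Markov chain: it records which words follow which in a text corpus, then continues a prompt by repeatedly sampling a recorded follower. The corpus, function names and parameters here are hypothetical and for illustration only.

```python
import random
from collections import defaultdict
from typing import Dict, List, Optional


def build_chain(corpus: str) -> Dict[str, List[str]]:
    """Map each word in the corpus to the list of words observed right after it.

    Repeated followers stay in the list, so frequent continuations are
    sampled more often.
    """
    words = corpus.split()
    chain: Dict[str, List[str]] = defaultdict(list)
    for current, following in zip(words, words[1:]):
        chain[current].append(following)
    return dict(chain)


def generate(chain: Dict[str, List[str]], prompt: str,
             max_words: int = 20, seed: Optional[int] = None) -> str:
    """Continue the prompt word by word until a dead end or max_words is reached."""
    rng = random.Random(seed)  # seeded for repeatable rehearsals
    output = prompt.split()
    if not output:
        return ""
    for _ in range(max_words):
        followers = chain.get(output[-1])
        if not followers:  # the last word never had a successor in the corpus
            break
        output.append(rng.choice(followers))
    return " ".join(output)


if __name__ == "__main__":
    # Hypothetical miniature corpus standing in for product-launch speeches.
    corpus = ("the yu is the future of you and the yu will change "
              "the way you see yourself forever")
    model = build_chain(corpus)
    print(generate(model, "the yu", seed=7))
```

Training separate chains on separate corpora (launch speeches versus product disclaimers, say) loosely mirrors the idea of building different models from different databases, though a modern language model produces far more coherent text.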

Ultimately, due to the Covid pandemic, we had to shift to a real-time virtual performance, putting the Machine Learning research and the development of the live, in-person theatrical event on hold. For a year during the pandemic, the team worked on a virtual performance, which had a workshop/proof-of-concept series of performances in June 2021. The new clown character, Perceval, the eccentric tech guru who invented the yu, hosted a Game [The System] Night fundraiser, A Night of You, to raise capital and gain investors for his brilliant invention. During the live Zoom event, he gets to show off his own personal yu and demonstrate exactly why you should invest early in this lucrative idea. Problem is, the yu isn't actually perfect yet, and when it is, it'll change the game forever. Learn more about the yu and Perceval here.

We researched various motion capture systems and real-time avatar pipelines, such as Unreal’s MetaHuman. Ultimately, for the virtual performance we decided to use Snap Cam, an Augmented Reality system, to create a custom avatar for the protagonist's yu. The team worked remotely via multiple online collaborative and real-time tools and video conference systems to:

- Build a real-time 3D virtual production pipeline for live production. Using current extended reality (XR) techniques, we created a workflow in which the remote clown is filmed in front of a greenscreen, composited into a virtual 3D environment, given an AR filter in real time, and then streamed to live audiences via Zoom.

- Create a prototype online/virtual performance system for research, rehearsals and performance, with a workflow that includes Unreal Engine, TouchDesigner, Snap Cam (AR digital avatar), QLab, Dante Virtual Audio, and Zoom.

- Create a Covid-safe, remote/virtual production studio in ABW250 for interactive, real-time performance in Zoom.

- Create prototype audience participation system for online/virtual show.

- Experiment with alternate storytelling modalities, including texts and emails of TikTok videos sent to audience members before and after the show.
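The first compositing step in the pipeline above, putting a greenscreen performer into a rendered environment, rests on chroma keying. The sketch below is a hypothetical, deliberately tiny illustration in pure Python, not the Unreal/TouchDesigner setup the team built. It makes a hard matte from an assumed "green is bright and dominant" rule and swaps keyed pixels for background pixels. The thresholds `min_green` and `dominance` are invented values.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # 8-bit (r, g, b)
Frame = List[List[Pixel]]     # rows of pixels


def is_key_green(pixel: Pixel, min_green: int = 100, dominance: float = 1.4) -> bool:
    """Hard matte test: the pixel counts as greenscreen if green is bright and clearly dominant."""
    r, g, b = pixel
    return g >= min_green and g > dominance * r and g > dominance * b


def composite(camera: Frame, background: Frame) -> Frame:
    """Replace keyed greenscreen pixels in the camera frame with the rendered background."""
    if len(camera) != len(background) or any(
        len(cam_row) != len(bg_row) for cam_row, bg_row in zip(camera, background)
    ):
        raise ValueError("camera and background frames must have the same dimensions")
    return [
        [bg if is_key_green(px) else px for px, bg in zip(cam_row, bg_row)]
        for cam_row, bg_row in zip(camera, background)
    ]


if __name__ == "__main__":
    camera = [[(0, 255, 0), (200, 50, 40)],    # greenscreen, skin tone
              [(30, 180, 40), (90, 90, 90)]]   # greenscreen, grey sweater
    virtual_set = [[(10, 10, 10), (20, 20, 20)],
                   [(30, 30, 30), (40, 40, 40)]]
    print(composite(camera, virtual_set))
```

Production keyers run on the GPU and produce soft mattes with spill suppression, so edges such as hair blend into the virtual set instead of switching abruptly as they do here.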

What we discovered in this virtual performance space was an exciting new, multi-modal approach to storytelling that could include live virtual events and, in the future, live in-person events. The research continues to evolve, with the new storyline and explorations in Motion Capture, Machine Learning, Artificial Intelligence, Augmented Reality, and virtual and in-person performances. We plan to integrate the newly acquired motion capture system in the University of Iowa Department of Theatre Arts into a workflow that tracks the live performer, props and the physical camera and aligns them with the virtual world.

Funding to Date:

| Amount | Source | Note | Year |
|---|---|---|---|
| $ 25,000.00 | External: Epic Mega Grant | Co-PI, awarded for project | 2020 - |
| $ 2,000.00 | Provost Investment in Strategic Priorities Award. University of Iowa | Awarded for summer research stipend for Co-PI Daniel Fine | Summer 2021 |
| $ 2,000.00 | Theatre Arts Department. University of Iowa | Awarded to Co-PI Associate Professor Paul Kalina for summer research stipend | Summer 2021 |
| $ 4,900.00 | Public Digital Arts Cluster Faculty Research Grant. University of Iowa | Awarded to Co-PIs for project | 2019 |
| $ 5,000.00 | Arts Across Border Grant. University of Iowa | Awarded to Co-PIs for project | 2019 |
| $ 2,000.00 | Theatre Arts Travel Grant. University of Iowa | Travel funds to UK/PQ19 for Co-PI Daniel Fine | 2018 |
| $ 2,000.00 | Theatre Arts Travel Grant. University of Iowa | Travel funds to UK/PQ19 for Co-PI Paul Kalina | 2018 |
| $ 1,000.00 | Dance Department Travel Grant. University of Iowa | Travel funds to UK/PQ19 for Co-PI Daniel Fine | 2018 |
| $ 600.00 | Theatre Arts Felton Foundation Funding. University of Iowa | Travel funds to UK/PQ19 for grad student Chelsea Regen | 2019 |
| $ 600.00 | Theatre Arts Felton Foundation Funding. University of Iowa | Travel funds to UK/PQ19 for grad student Courtney Gaston | 2019 |
| $ 600.00 | Theatre Arts Felton Foundation Funding. University of Iowa | Travel funds to UK/PQ19 for grad student Sarah Hamilton | 2019 |
| $ 1,350.00 | Margaret Hall Scholarship Fund. Theatre Arts. University of Iowa | Research funds for grad student Chelsea Regen to use on the project | 2019 |
| $ 1,350.00 | Margaret Hall Scholarship Fund. Theatre Arts. University of Iowa | Research funds for grad student Courtney Gaston to use on the project | 2019 |
| $ 1,200.00 | International Programs. University of Iowa | Travel funds to UK/PQ19 for Co-PI Paul Kalina | 2019 |
| $ 1,100.00 | International Programs. University of Iowa | Travel funds to UK/PQ19 for Co-PI Daniel Fine | 2019 |
| $ 1,700.00 | Theatre Arts Summer Travel Grant. University of Iowa | Travel funds to UK/PQ19 for grad student Chelsea Regen | 2019 |
| $ 1,700.00 | Theatre Arts Summer Travel Grant. University of Iowa | Travel funds to UK/PQ19 for grad student Courtney Gaston | 2019 |
| $ 1,700.00 | Theatre Arts Summer Travel Grant. University of Iowa | Travel funds to UK/PQ19 for grad student Sarah Hamilton | 2019 |
| $ 500.00 | Graduate & Professional Student Government (GPSG) Grant. University of Iowa | Travel funds to UK/PQ19 for grad student Sarah Hamilton | 2019 |
| $ 250.00 | Graduate & Professional Student Government (GPSG) Grant. University of Iowa | Travel funds to UK/PQ19 for grad student Chelsea Regen | 2019 |
| $ 2,500.00 | Graduate & Professional Student Government (GPSG) Grant. University of Iowa | Awarded to Co-PI Associate Professor Paul Kalina for project | 2018 |
| $ 18,000.00 | Obermann Center for Advanced Studies Interdisciplinary Research Grant. The University of Iowa | Awarded to Co-PIs as a stipend for summer research. The PIs donated the entire stipend (post taxes) to the research project. | Summer 2018 |
| $ 77,050.00 | TOTAL TO DATE | | |
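Every amount in the funding table is a whole-dollar figure, so the stated total can be checked with integer arithmetic. The sketch below is a minimal tally with amounts transcribed from the table in row order and source labels abbreviated. The external/university split simply follows the table's single "External" label, and the names `FUNDING` and `summarize` are invented for this example.

```python
from typing import Dict, List, Tuple

# (abbreviated source, amount in whole US dollars, external?) in table order.
FUNDING: List[Tuple[str, int, bool]] = [
    ("Epic Mega Grant", 25_000, True),
    ("Provost Investment in Strategic Priorities Award", 2_000, False),
    ("Theatre Arts Department stipend (Kalina)", 2_000, False),
    ("Public Digital Arts Cluster Faculty Research Grant", 4_900, False),
    ("Arts Across Border Grant", 5_000, False),
    ("Theatre Arts Travel Grant (Fine)", 2_000, False),
    ("Theatre Arts Travel Grant (Kalina)", 2_000, False),
    ("Dance Department Travel Grant (Fine)", 1_000, False),
    ("Felton Foundation (Regen)", 600, False),
    ("Felton Foundation (Gaston)", 600, False),
    ("Felton Foundation (Hamilton)", 600, False),
    ("Margaret Hall Scholarship Fund (Regen)", 1_350, False),
    ("Margaret Hall Scholarship Fund (Gaston)", 1_350, False),
    ("International Programs (Kalina)", 1_200, False),
    ("International Programs (Fine)", 1_100, False),
    ("Theatre Arts Summer Travel Grant (Regen)", 1_700, False),
    ("Theatre Arts Summer Travel Grant (Gaston)", 1_700, False),
    ("Theatre Arts Summer Travel Grant (Hamilton)", 1_700, False),
    ("GPSG Grant (Hamilton)", 500, False),
    ("GPSG Grant (Regen)", 250, False),
    ("GPSG Grant (Kalina)", 2_500, False),
    ("Obermann Center Interdisciplinary Research Grant", 18_000, False),
]


def summarize(rows: List[Tuple[str, int, bool]]) -> Dict[str, int]:
    """Sum all rows and split the total into external and university sources."""
    total = sum(amount for _, amount, _ in rows)
    external = sum(amount for _, amount, is_external in rows if is_external)
    return {"total": total, "external": external, "university": total - external}


if __name__ == "__main__":
    print(summarize(FUNDING))
```

The 22 rows sum to $77,050, matching the stated total, with $25,000 from the one external source and $52,050 from University of Iowa sources.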

Teaching:

This project offers current and former students of the University of Iowa, as well as students from Backstage Academy in 2018/2019, a diverse pedagogical and practical experience in which they contribute their creative, technological and organizational skills to faculty-led research. This research pays students and alumni stipends and allows them to contribute at the highest levels to one of the first international projects to bring the power of the analog clown into the digital age. Student researchers are supported through ICRU (Iowa Center for Research by Undergraduates) funding.

As a direct result of the research project’s use of motion capture technology, it became clear to Paul and me that performing with motion capture is an essential new skill that student actors need access to and training in, in order to be competitive in the industry upon graduation. Along with Professor Bryon Winn, we co-wrote and received a $555,987 grant from UI Student Technology Fees (STF) to create a mobile motion capture (MOCAP) studio for students in the Department of Theatre Arts, with clear potential for cross-disciplinary collaboration between undergraduates in several departments and colleges within the University. Our goal is to introduce motion capture performance techniques to every student in the Department of Theatre Arts regardless of theatrical discipline. We view this acquisition of new technology as a fundamental requirement for preparing our students for professional careers in the entertainment industry of the future and for supporting our mission of innovation in the area of Digital Arts. With access to and training on MOCAP and related technologies, our students will be positioned to join a competitive workforce that is revolutionizing how stories are told and allowing artists to create new experiences for modern audiences.

Supporting Documents:

Link to: Prague Quadrennial Invitation Letter

Link to: Working Draft of PQ Story Outline

Link to: Obermann Center 2018 Interdisciplinary Research Grant Application

Link to: Obermann Center 2018 Interdisciplinary Research Award Letter

Link to: Submitted AHI Major Grant Application

Link to: Submitted International Programs Summer Fellowship Grant Application

Link to: Submitted MidAtlantic International Artists Grant Application

Link to: Submitted Public Digital Arts Grant Application

Link to: Submitted Creative Matches Grant Application

Link to: One Page Return on Investment Letter to PixMob

Link to: Project One Page for Industry Support

copyright 2021