Daniel Fine

Theatre Arts | Dance | Public Digital Arts

Tenure Dossier

Live Performance in Shared Virtual Worlds

Virtual Reality & Live Performance

Role:

Co-Principal Investigator, Artistic Director, Co-Creator, Digital Media & Experience Design

Peer-Reviewed Outcomes/Dissemination:

February 4-12, 2020

Invited Performance and Residency

Advanced Computing Center for Arts and Design

The Ohio State University

Columbus, Ohio

December 2018

Performance: Mabie Theatre,

University of Iowa, Iowa City, IA

March, 2021

Invited Speaker, Conference Presentation

Virtual Performance Series: 3D Environments:

Motion Capture and Avatars

USITT 21 Virtual Conference and Stage Expo

June 16, 2020

Invited Solo Speaker, Conference Presentation

Immersive & Mixed Reality Scenography: Designing Virtual Spaces

AVIXA at InfoComm: The Largest Professional Audiovisual Trade Show in North America

Las Vegas, Nevada

Cancelled due to Covid

April 4, 2020:

Speaker on panel Immersive & Mixed Reality Scenography: Designing Virtual Spaces.

USITT 20 Conference & Stage Expo

Columbus, OH

Cancelled due to Covid

November 2019

Invited Solo Speaker: VR and Live Performance

LDI Tradeshow and Conference

Las Vegas, Nevada

June 9, 2019

The total amount of grants received to date is $52,000.00.

Major grants of note include:

- Two rounds of funding from UI OVPR (Office of the Vice President for Research) Creative Matches grant totaling $23,000.00.

- A three-year grant proposal for $499,956.00 is currently being prepared for resubmission to the National Science Foundation (NSF).

A full breakdown of total grants awarded can be found here.

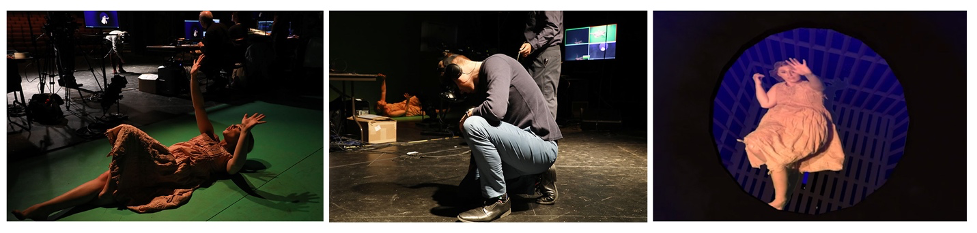

Photo Gallery:

Haptic feedback: A user stands next to a fire in the virtual world while a space heater blows hot air on her in the real world in Iowa Performance of Elevator #7.

A user looks down into a hole in the floor in the virtual world to see a live performer below him. Performer is on a greenscreen in the background. Iowa Performance of Elevator #7.

Live performer on a greenscreen. A physical camera is mounted above. The video stream is displayed on a plane below the floor in the virtual world. Iowa Performance of Elevator #7.

Left: A downlooking video camera captured Casandra. Middle: Player looking down at Casandra. Right: Composited 2D video stream in a hole in the virtual environment from the player’s view. Iowa Performance of Elevator #7.

Composite of the actors' view of the player (in physical form) and a display showing the player's view in VR. Iowa Performance of Elevator #7.

A user talks to the performer in Iowa Performance of Elevator #7.

User sees the live performer composited into the virtual world in Iowa Performance of Elevator #7.

User talks to a live performer in Iowa Performance of Elevator #7.

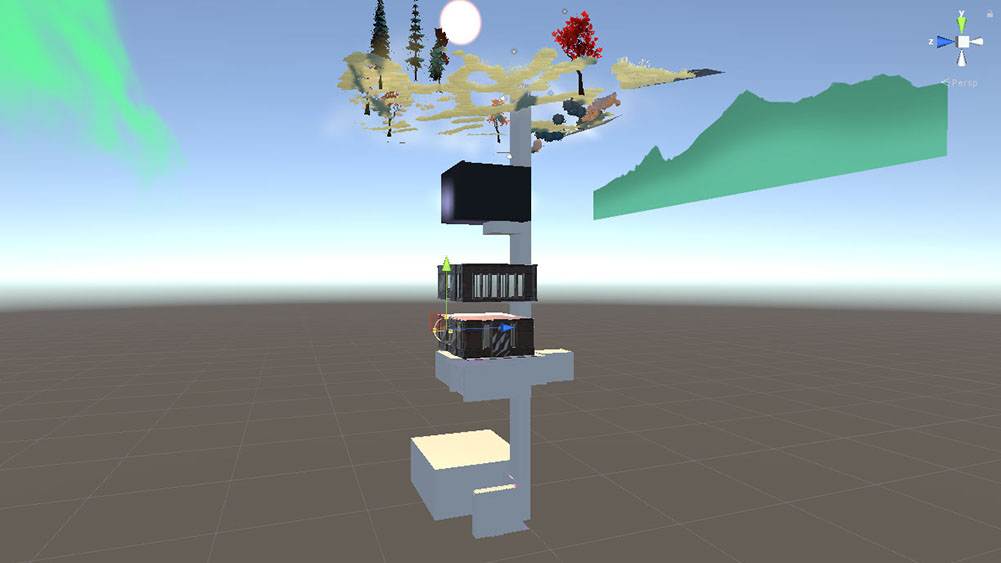

Rendering of the 3D model (built with 3Ds Max) inside Unity for Iowa Performance of Elevator #7.

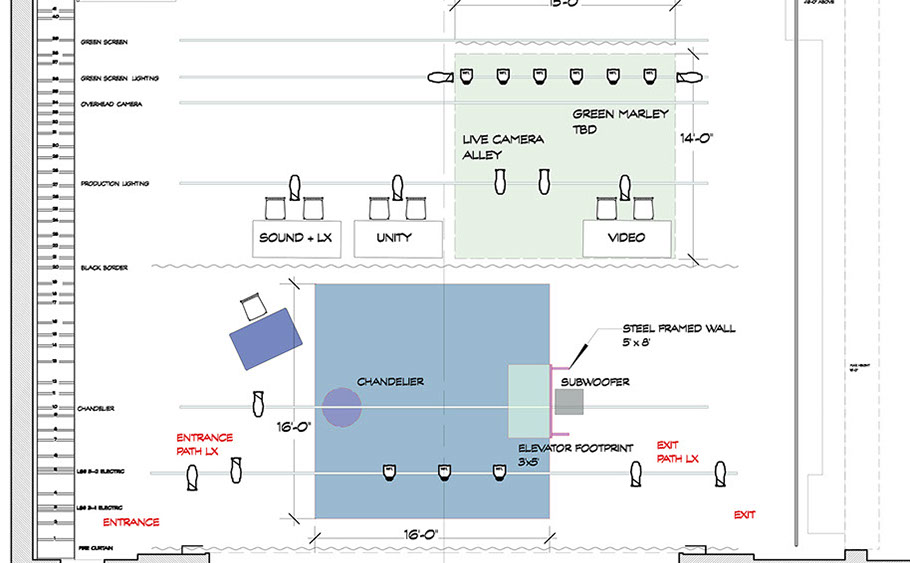

Ground plan of stage for audience experience for Iowa Performance of Elevator #7.

Audience watches a user at OSU Performance of Elevator #7.

Haptic feedback of real flowers falling on a user as the user sees flowers falling in the headset. OSU Performance of Elevator #7.

OSU Performance of Elevator #7.

Live audience at OSU Performance of Elevator #7.

User puts on trackable boots (via a Vive puck) at OSU Performance of Elevator #7. User sees digital boots in the headset.

Iowa and Ohio team for OSU Performance of Elevator #7.

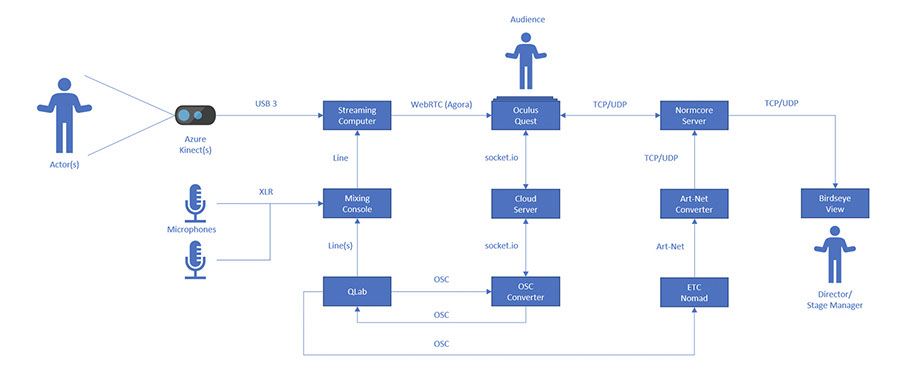

System diagram to enable one-to-many 3D video streaming of actors and multiple, remote audience members.

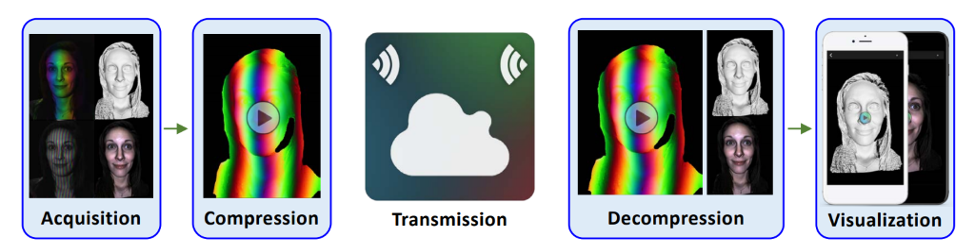

Pipeline for 3D video streaming for distributed VR. The Acquisition Module captures/produces 3D range data/color texture in real-time. Compression Module encodes depth/color data into a standard 2D video codec that is transmitted within a low-latency video stream via WebRTC. On the remote device, the Decompression Module decodes depth and color data to reproduce the 3D range data. Finally, the Visualization Module allows the received 3D video data to be displayed and interacted with in real-time.

Example data frame captured from the Microsoft Azure Kinect (DK). Left: Color texture image; Middle: Depth map with distance-based threshold applied; Right: Colored 3D reconstruction of the depth map with a point-based “hologram” special effect applied in the rendering.

For distributed VR.

Videos:

Highlight video by UI Iowa Now

Performance of Elevator #7

Creative Team:

Faculty

Joseph Kearney: co-creator, virtual reality technical director

Daniel Fine: co-creator, artistic director, director, live video

Tyler Bell: co-creator, depth image and Unity development

Alan MacVey: co-creator, writer, director

Bryon Winn: co-creator, lighting & sound design

Monica Correia: co-creator, 3-D design

Joseph Osheroff: primary performer

Students (Former and Current)

Emily, BS Informatics (paid ICRU position)

Brillian, BA Theatre Arts (paid ICRU position)

Travis, BS Engineering (paid ICRU position & consultant fees)

Jacob, BS Engineering (paid ICRU position)

Joe, BFA 3D Design (paid ICRU position)

Runqi Zhao, Computer Science (paid ICRU position)

Yucheng Guo, BFA 3D Design (paid ICRU position)

Ted Brown, BA Theatre Arts (paid hourly via Creative Matches grant)

Marc Macaranas, MFA Dance (paid hourly via Creative Matches grant)

Sarah Gutowski, MFA 3D Design (paid hourly via Creative Matches grant)

Xiao Song, BS Computer Science (paid hourly via Creative Matches grant)

Ryan McElroy, BA Theatre Arts (paid hourly via Creative Matches grant)

Chelsea Regan, MFA Costume Design (paid hourly via Creative Matches grant)

Ashlynn Dale, BA Theatre Arts (paid stipend via Creative Matches grant)

Octavius Lanier, MFA Acting (paid stipend via Creative Matches grant)

Chris Walbert, BA Theatre Arts (paid stipend via Creative Matches grant)

Shelby Zukin, BA Theatre Arts (paid stipend via Creative Matches grant)

Derek Donnellan, BA Theatre Arts (paid stipend via Creative Matches grant)

Courtney Gaston, MFA Design

Nick Coso, MFA Design

Note: the writing in the sections below is a combination of text edited and compiled by Daniel Fine and written by:

- Daniel Fine, for this portfolio website

- Co-PI Joe Kearney, Alan MacVey and I, for Creative Matches grants

- Co-PIs Joe Kearney and Tyler Bell, Alan MacVey, and I, for NSF grant submission

About:

A multi-year research project of faculty and students at the University of Iowa departments of Theatre Arts, Dance, Computer Science, Art & Art History, and Engineering that explores the emerging collision of live performance, 3D design, computer science, expanded cinema, immersive environments, and virtual worlds by creating live performances in shared virtual and physical worlds. This research develops new ways to create immersive theatrical experiences in which multiple, co-physically present and remotely-located audience members engage with live actors through 3D video streams in virtual and hybrid physical/digital environments. To achieve this, the team devises original creative experiences, develops robust, real-time depth compression algorithms and advanced methods to integrate live 3D video and audio streams into spatially distributed, consumer VR systems.

In addition, the research integrates live-event technology, used in professional theatrical productions, to interactively control lighting, sound, and video in virtual reality software and technology. This empowers VR designers to create rich, dynamic environments in which lighting, sound, and video cues can be quickly and easily fine-tuned during the design process, and then interactively adjusted during performances. By targeting commodity-level VR devices, theatrical productions would be available to those who live in remote locations or are place-bound; to those with restricted mobility; and to those who may be socially isolated for health and safety reasons. More broadly, this research explores ways to advance remote communication and natural interaction through the use of live 3D video within shared virtual spaces (i.e., telepresence). Such advances have potential for great impact on distance training and education, distributed therapy, and telemedicine, areas whose needs have been accelerated by the current pandemic.

History:

In 2017 Professor Joseph Kearney of Computer Science and Professors Alan MacVey and Bryon Winn from Theatre Arts had an informal meeting to discuss a collaboration with live performance and virtual reality. Professors Kearney, MacVey and Winn invited me to join the group. I brought in a few other junior faculty from Art and Art History and Engineering. We met for several months, brainstorming ideas and what a collaboration might look like. Given my experience with the digital arts and immersive technologies, I was invited to co-lead the informal faculty group along with Professor Joe Kearney.

Working as Co-PIs, Professor Kearney and I led the charge in writing a grant for the UI Creative Matches program. We were awarded $12,000.00 in funding and secured additional funding from ICRU to hire undergraduate researchers. A few of the initial faculty in our informal group dropped out and a few others came on board, including Professor Monica Correia from Art and Art History and Lecturer Joseph Osheroff from Theatre Arts. For a year we worked together, along with graduate and undergraduate students from CS, Dance, Theatre, Art and Art History, to develop our first in-person performance.

In December of 2018, we mounted Elevator #7, a room-scale, mixed-reality, live experience on the stage of the Mabie Theatre at the University of Iowa. Based on the success of this research, we were invited by Creative Matches to apply for another round of funding. We were awarded an additional $13,000 from Creative Matches. The team was interested in more advanced methods of filtering (keying) out the background of a live performer than the traditional greenscreen method we were using. We invited newly hired Public Digital Arts (PDA) Cluster faculty Assistant Professor Tyler Bell of Engineering to join our faculty research group to lead the development of advanced depth-filtering methods.

Our informal interdisciplinary faculty research project was now becoming an ongoing, multi-year exploration into the principles of producing live performance/VR experiences; the methods of integrating live actors into virtual worlds; novel ways to create the scenography of real and virtual worlds, including live image streams and physical props that provide haptic feedback; and technical solutions for unleashing the possibilities of storytelling and performance design within VR.

We needed a dedicated facility where we could set up a small research lab. We were granted the interim use of a small space in the Old Museum of Art building. Having a shared space where we could base our research was crucial to the success and continuation of the collaboration. Around this same time, we were invited to remount Elevator #7 for a week-long artist residency and series of public performances at the Advanced Computing Center for Arts and Design at The Ohio State University in early February 2020.

By the end of March 2020, nearly all live performances in the United States were shut down due to the Coronavirus pandemic. Undaunted, technologists and artists responded by using live streaming on the internet to entertain and inspire audiences who were isolated at home. While streaming technology has been available for some time, it had not been widely used for live performance. Now, suddenly, with no assurance of when live performing arts would again be possible, a world-wide experiment was/is being conducted using live video streaming to engage audiences and to create entertaining and meaningful artistic events. However, the limitations of video streaming soon became apparent: Traditional 2D video streaming flattens communications and imprisons performers inside the rectangle of a screen; it is a far cry from actually “being there.”

The research we already were conducting seemed like a natural pivot to a new experience, where audiences and performers could connect with each other in meaningful ways, from a distance and in real-time with realistic, streaming 3D video telepresence in shared virtual worlds. All in-person, on-campus research labs were shut down due to University Covid policy, so we dismantled our lab, distributed existing and new equipment to all team members and purchased Oculus Quest head-mounted displays (HMDs) for all members of the team (our previous research development was on the HTC Vive). It took several months to set up a remote workflow using multiple virtual tools. We began initial experimentations and started development on a new, networked live VR performance. Simultaneously, we spent six months writing a half-million dollar National Science Foundation grant (still under review) to fully develop the use of live 3D video to capture and deliver digital representations of live performers, costumes, and props into a networked virtual reality (VR) environment.

Our work takes inspiration from the long history of immersive theater, an art that is as old as western drama itself. The arts are embracing VR and Augmented Reality (AR) as exciting media for storytelling and creative expression. Virtual Reality has come to play a role in some of the most innovative immersive theatrical experiments. The majority of these works depend on the technical solution of live actors controlling virtual avatars via motion capture; audiences then meet these live digital avatars in shared virtual worlds. To our knowledge, very little research is being conducted that streams live 3D video of actors into theatrical VR experiences. The immersive and interactive possibilities of VR are stimulating artists and technologists to find new ways for audiences to participate in stories that unfold around them.

Preliminary Research: Elevator #7:

For over two years the team has experimented with VR-based theater in a production entitled Elevator #7. Our goal was to create an immersive theatrical experience in which audience members engaged with live actors in a virtual world. Live actors are the soul of any theatrical production. They bring life to the stage and interpret the script. In immersive theater (whether in virtual or physical locations) audience members participate in the action and actors must respond to their unpredictable behavior.

A key challenge for immersive VR theater is how to incorporate live actors into the virtual world. A common approach is to create graphic avatars that mimic the movements of actors. This requires real-time motion tracking, which can be cumbersome, expensive, and brittle. Moreover, graphic avatars can look artificial and creepy. This phenomenon, known as the uncanny valley, happens when humanoid characters appear nearly real, but strangely artificial. [14]

Our approach used green screen technology to embed actors in the virtual world. Actors were captured on video performing in front of a green screen; the background was chroma-keyed out in real-time and the video was then displayed on a video plane in the virtual world. The player saw the actor embodied in the virtual environment.

The Setup

The script for our initial production of Elevator #7 centered around a tour of a vintage hotel. The in-person experience took place on the stage of a theater. Drapes were hung to enclose the tracking space and hide the green screen and technical stations from the player (see Fig. #). The players were greeted in the lobby of the theater and escorted into the enclosed space that was roughly furnished as a hotel reception area. The player donned a VR headset and noise-cancelling headphones and found themselves in the lobby of a virtual hotel that aligned with the physical set. The hotel manager guided the player to multiple floors using a virtual elevator to move from floor to floor and finally to a rooftop garden. We chose to have players walk about the tracking space of the HTC Vive (~16 ft. by 16 ft.) without using hand controllers. The goal was to make movement as natural and intuitive as possible.

The Technology

The project was built on the Unity3D gaming platform. Players wore an HTC Vive head-mounted display (HMD). As described above, actors stood in front of a green screen adjacent to a 16 ft. by 16 ft. tracking space. Cameras were mounted in front of the green screen for front facing video and above a green screen floor for down-facing video. A video switcher selected the active camera. Audio consisted of live audio from the actors' microphones as well as prerecorded clips (e.g. the sound of the elevator door opening or closing).

A collection of Unity scripts controlled the dynamic properties of the production activated by keyboard cues. This included opening and closing doors, turning on and off sound clips, video planes, and lights, virtually shaking the elevator, and activating particle systems. A member of the production manually activated scripts based on cues from the actors at key points in the play.

We experimented with the orientation and scale of the image planes. We also experimented with layering video streams to create an illusion of 3D by placing a pre-recorded video on a video plane in front of another plane with a live video stream on it, analogous to multiplane animation developed by Disney Studios to create the illusion of depth by moving a background image relative to a foreground image.

A second version of the production was created over the next year with a richer story and additional technology. This version was performed twenty-four times during a week-long residency at the Advanced Computing Center for the Arts and Design (ACCAD) at The Ohio State University in February 2020.

Current Research: Distributed VR

The aim of our current research is to explore the technical and artistic possibilities of live, immersive theatrical productions in which actors and multiple players (audience members), despite their remote physical locations, are co-present in VR. Determined to link technology and live performance in new ways, we began work on three new objectives:

- Objective 1: Develop robust algorithms and platforms for streaming live 3D video of actors with low-latency and multi-channel audio into shared virtual spaces.

- Objective 2: Create technical and artistic solutions for multiple players, who are in remote physical locations distributed across the internet, to engage with and be co-present with live 3D actors in a networked virtual world.

- Objective 3: Integrate live-event technology with VR technology to provide access to powerful and easy to use interfaces to interactively control lighting, sound, and video in VR.

Objective 1: Develop robust algorithms and platforms for streaming live 3D video of actors with low-latency and multi-channel audio into shared virtual spaces.

For two years, our method for integrating live performers into the virtual world used a traditional greenscreen cinematic technique. While the green screen video produced a compelling sense of co-presence with the actor, it appeared distorted when viewed from locations in VR that were far from the camera axis. We discovered early in our research that the spatial relationship between the participant and the video plane on which an actor was displayed had a large impact on the effectiveness of the video. When the viewer's line of sight was more nearly perpendicular to the plane on which the actor was displayed, the actor seemed well integrated into the scene. Moreover, it wasn't apparent that the actor was, in fact, flat. Small turns and motions by the actor aided the illusion that the actor was embodied in the virtual environment. However, when the video planes were viewed from an oblique angle, the actor's image appeared distorted. We experimented with using billboards to control the viewing angle (i.e., planes that rotate about the vertical axis so that they always face the viewer); however, billboards can lead to aberrant movement of the actor. If the actor is centered in the video, the rotation of the image plane makes the actor appear to spin about the axis of rotation. If the actor is off center in the image plane, the rotation of the plane causes the actor to appear to swing about the center of the plane as the plane rotates.
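The billboard behaviour described above can be illustrated with a little geometry. The following is a minimal sketch, not the team's Unity code: it assumes a top-down (x, z) floor plan, a yaw of zero when the plane faces the +z direction, and hypothetical function names. It computes the yaw that turns a video plane toward the viewer and shows where an actor drawn off-center on that plane ends up in the world as the plane rotates.

```python
import math

def billboard_yaw(plane_xz, viewer_xz):
    """Yaw (radians, about the vertical axis) that points the plane's normal at the viewer."""
    dx = viewer_xz[0] - plane_xz[0]
    dz = viewer_xz[1] - plane_xz[1]
    return math.atan2(dx, dz)

def actor_position(plane_xz, yaw, offset):
    """World (x, z) of an actor drawn `offset` metres along the plane's local x-axis."""
    return (plane_xz[0] + offset * math.cos(yaw),
            plane_xz[1] - offset * math.sin(yaw))

plane = (0.0, 0.0)
# The viewer walks a quarter circle around the plane: from in front of it to its side.
yaw_front = billboard_yaw(plane, (0.0, 3.0))
yaw_side = billboard_yaw(plane, (3.0, 0.0))

# A centered actor stays in place but turns with the plane (appears to spin).
centered_front = actor_position(plane, yaw_front, 0.0)
centered_side = actor_position(plane, yaw_side, 0.0)

# An actor standing 0.5 m off-center is carried around the plane's center (appears to swing).
offset_front = actor_position(plane, yaw_front, 0.5)
offset_side = actor_position(plane, yaw_side, 0.5)
swing = math.hypot(offset_side[0] - offset_front[0], offset_side[1] - offset_front[1])
```

In this sketch the centered actor never changes position while the plane turns through 90 degrees, whereas the off-center actor is displaced by roughly 0.7 m without having moved in the studio, which is the aberrant swinging motion noted above.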

To address these issues, we began low-level tests to leverage a 3D video streaming pipeline for the purpose of wirelessly delivering low-latency 3D video to virtual environments. This would allow the actor to be captured in three dimensions and then delivered to the viewer in virtual reality, enabling more natural perspectives and interactions. Recent research by Co-PI Bell has demonstrated such pipelines in the context of delivering 3D measurement data to mobile devices and AR environments. These pipelines comprise five steps: (1) 3D data acquisition, (2) 3D data encoding and compression, (3) low-latency 3D video streaming, (4) 3D video decoding, and (5) 3D video visualization.

We used the Microsoft Azure Kinect Developer Kit (DK) to provide a real-time feed of 3D range data, with associated 2D color texture, of an actor. Depth imaging devices, like the Kinect, typically provide 3D information within a depth map that uses 16 or 32 bits-per-pixel of precision. While this precision can provide accurate depth information to programs, depth maps at this bit depth are often difficult to stream efficiently over standard wireless networks. To address this, each frame of the actor’s 3D depth map is encoded, in real-time, into a 2D image via a depth range encoding method. These encoding methods restructure the depth map such that its values are robustly represented within the color channels of a regular RGB image. Each encoded frame is then transmitted within a low-latency, lossy 2D video stream via WebRTC. Typically, only 5-15 Mbps are needed to stream the encoded video data. The player’s virtual environment will receive the actor’s 2D video stream and recover the 3D data encoded within each frame. Finally, the 3D video will be placed within the player’s virtual environment to enhance co-presence between the player and the actor. In addition, once the reconstructed 3D video data is within the player’s virtual space, it can be realistically rendered with its color texture and/or post-processing/special effects can be implemented to fit with the theatrical story. In addition to the actor, the 3D video will capture any objects in the captured depth range. Thus, the actor also can bring physical props along with them into the virtual world.
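To make the idea of packing depth into color channels concrete, here is a deliberately simplified sketch. It is not the depth range encoding method used in this research: it splits a quantized 16-bit depth value into a high byte and a low byte, which illustrates the packing but, unlike the methods described above, would not survive lossy video compression well. The function names and the near/far thresholds (500 mm and 4000 mm) are assumptions chosen for illustration.

```python
def encode_depth(depth_mm, near_mm=500, far_mm=4000):
    """Pack one depth sample (millimetres) into an (R, G, B) triple of 8-bit values."""
    if depth_mm < near_mm or depth_mm > far_mm:
        # Distance-based threshold: anything outside the range is treated as
        # background, and B = 0 marks the pixel as "no data".
        return (0, 0, 0)
    # Quantize the in-range depth to 16 bits, then split it across R and G.
    q = round((depth_mm - near_mm) / (far_mm - near_mm) * 65535)
    return (q >> 8, q & 0xFF, 255)

def decode_depth(rgb, near_mm=500, far_mm=4000):
    """Recover the depth in millimetres, or None for background pixels."""
    r, g, b = rgb
    if b < 128:
        return None
    q = (r << 8) | g
    return near_mm + q / 65535 * (far_mm - near_mm)

def encode_frame(depth_rows):
    """Encode a whole depth map (list of rows) into an RGB image of the same shape."""
    return [[encode_depth(d) for d in row] for row in depth_rows]

# A tiny 1 x 3 "depth map": too close, an actor at 1.5 m, and a far wall.
encoded = encode_frame([[100, 1500, 6000]])
decoded = [decode_depth(px) for px in encoded[0]]
```

Because the thresholding happens before encoding, the background is removed without a green screen, and anything the actor holds within the depth range is kept along with them.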

In addition to increasing visual immersion for the players via live 3D video, a major part of this initiative is to develop a pipeline to deliver real-time audio from microphones and sources external to the VR headset. Much of our work involves live actors, live players, sound events, and haptics that are reactive to the unpredictable nature of live performance. With the addition of external digital sound routing, compression, and processing equipment, we will be able to improve the audio experience of the player in the VR world. Isolating the player’s audio experience from the real environment presents a number of technical challenges and more experimentation is required to find solutions which can be implemented in distributed performances.

Objective 2: Create technical and artistic solutions for multiple players, who are in remote physical locations distributed across the internet, to engage with and be co-present with live 3D actors in a networked virtual world.

Although our previous immersive theatre experiments have been successful, the immersive experience was limited to one person at a time. Others watched the performance from specified viewing areas as spectators. However, only a single individual could have a first-person, embodied experience of being in the VR performance. This severely limited the number of people who could have the immersive experience of exploring the virtual environment and interacting with the actors. In addition, because the VR was driven by a single computer, everyone involved (the actors, the player, the technicians, etc.) had to be physically co-located.

The current research incorporates live multiplayer VR. By integrating distributed gaming platforms such as Normcore or Photon, we aim to provide users in multiple locations with a networked virtual theatrical environment that enables shared experiences. For example, this will allow one remote player to interact with their environment or a prop in some way while a second remote player watches this action take place in real-time. Additionally, this development would allow for technicians to be remotely located and virtual elements such as the lighting, set, and environments could be updated via the same multiplayer framework.

With the addition of multiple distributed players, we will need to introduce avatars to represent the virtual presence of other players so they can see one another. Whereas actors will be represented by their true 3D forms via the live 3D video streaming, players will use simple, graphic avatars based on the position and orientation of the HMD and hand controllers. Such avatars are widely used in social VR applications.

Our initial research used the HTC Vive HMD and controllers. We are experimenting with the Oculus Quest and Normcore for the distributed version of the production. The Quest offers a simple, self-contained platform that is easy to use, has very good tracking with high frame rates, does not require a tether to a high-performance PC, and is priced for a broader consumer market. This will allow for a larger audience.

In our initial productions, actors could see the player on the physical set and watch him or her on a computer monitor. After some practice, the actors gained a sense of how they and the player were oriented in the virtual world. This was important for directing their gaze toward the player to promote natural conversational interactions, and to gesture toward locations in the virtual world. With multiple players in remote locations, it is important to help actors know where they and all the players are in the virtual world. We are experimenting with a variety of ways to do this, including providing a third-person scene-view of the whole environment and a grid of player views. We are also exploring the use of our CAVE display system in Computer Science to situate the actor in the virtual world. The actor and depth-capture system will be placed in the CAVE. This will allow the actor to have a first-person view of the virtual world without having to wear an HMD so that the actor’s face can be captured by the Kinect.

Distributing and increasing the number of players requires not only technical advances but a new kind of script. In order to have multiple players engaged with one another, there must be an organic way for them to work together, and with the actors, toward a common goal. One approach is to organize them in teams – athletic, military, artistic, business, etc. Another is to have one or two players actively engaged in a journey while others offer advice, as occurs in some television game shows. We have developed a new experience as we have been working on technical advances. The two efforts have complemented and inspired one another, as they did in the development of Elevator #7.

Objective 3: Integrate live-event technology with VR technology to provide access to powerful and easy to use interfaces to interactively control lighting, sound, and video in VR.

Live productions such as the Super Bowl Halftime Show, the Olympics opening ceremony, or any Broadway show, use live-event technology to design and interactively control thousands of lights, sounds, and image projections. Key to these productions are powerful human-computer interfaces that make it easy to interactively configure and control the properties of light and sound sources, to position the 3D video of the actor, and to cue/trigger events synchronized with performance.

We are creating systems to integrate these same interfaces used to design and control live performances into VR platforms to provide familiar and easy ways to manipulate possibly hundreds of parameters in real-time. Having interfaces that connect established theatrical creation tools to VR expands the ability of artists and technicians to quickly and intuitively create and control dynamic virtual worlds using established, professional tools which have been researched and developed over the last fifty years.

In our first round of performance as research, we used conventional VR development in Unity3D to manually control lighting, sound, and video planes through the built-in, general-purpose editor that allows parameters of individual lights and sound sources to be selected and adjusted. Where possible, Unity scripts were used to automatically trigger effects and events. We found these built-in tools cumbersome and inefficient to use for both configuring and cueing lights, sound, and actions. It was particularly difficult to adjust, on the fly, to unpredictable actions by the players. By bringing live performance tools into VR, we leverage established methods for lighting and sound control and cueing of events.

Lighting plays a key role in storytelling in film, gaming, animation, and theater. It can be used to signify meaning, to raise dramatic tension, and direct the attention of viewers. Designing effective lighting requires experimentation to visualize the interplay between light sources and object surfaces. While current game development environments, such as Unity3D and Unreal, provide convenient interfaces to place lights and define their properties, they do not have good ways to interactively manipulate complex lighting configurations and see the results in a VR display.

ETC Nomad is a standard tool for theatrical lighting designers and provides them with the ability to control thousands of lighting parameters. Each lighting look is constructed from lights with a variety of intensities, colors, positions, and textures. These lighting looks are then recorded as a single cue which can be played back from a control button. Cues can reference lighting effects and can have a defined duration or fade time. This software allows a designer to view dozens of variations or approaches to an environment over the course of a few minutes. Once the ideal lighting look is created, this cue can be easily recalled at a specific moment in time or triggered by an external action.

QLab is a widely used sound playback software in theatre and live-event productions. Over the past few years, QLab has also become a valuable system for controlling other networked devices. It can be used to trigger sound, lighting, cameras, video, and movements in real-time in a live interactive performance.

Our preliminary research has revealed a few major challenges in this integration. First, when presenting to players using a variety of HMDs (e.g., Quest, Rift, Vive), we need to develop a process to deliver content that is predictable and similar for each participant. For example, we would like to vary the intensity, color, and beam spread of a lantern carried by a player in real-time as the player moves through different rooms in the experience. The ability to quickly adjust these parameters when developing the look of a scene is part of this goal. Second, it is important to minimize any additional latency introduced by the integration of the live-event technology.

QLab has a cue-based structure similar to that of ETC Nomad and can network with ETC Nomad in order to share commands and synchronize playback. ETC Nomad can generate real-time Art-Net packets. We have developed an interface that sends the Art-Net packets to an Art-Net Converter, which converts the packets into a Normcore DataStore compatible data structure. The resulting lighting data is then distributed to the connected participants through the Normcore Server, which we are already employing for 3D live video distribution.
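As a rough illustration of what an Art-Net converter has to do, the sketch below builds and parses an ArtDmx packet (the Art-Net packet type that carries DMX channel levels) and maps five channels onto the lantern parameters mentioned earlier. It is a sketch under stated assumptions, not the project's converter: the five-channel fixture layout (intensity, red, green, blue, beam spread) and all function names are hypothetical, and the output is a plain dictionary standing in for the Normcore-compatible structure, whose API is not shown here.

```python
import struct

ARTNET_ID = b"Art-Net\x00"   # fixed 8-byte packet identifier
OP_DMX = 0x5000              # ArtDmx opcode, sent low byte first

def build_artdmx(universe, channels, sequence=0):
    """Build an ArtDmx packet, as a lighting console would send it."""
    data = bytes(channels)
    if len(data) % 2:
        data += b"\x00"                          # data length must be even
    return (ARTNET_ID
            + struct.pack("<H", OP_DMX)          # opcode, little-endian
            + struct.pack(">H", 14)              # protocol version, big-endian
            + bytes([sequence, 0])               # sequence, physical port
            + struct.pack("<H", universe)        # SubUni byte, then Net byte
            + struct.pack(">H", len(data))       # data length, big-endian
            + data)

def parse_artdmx(packet):
    """Return (universe, dmx_bytes) from an ArtDmx packet."""
    if len(packet) < 18 or packet[:8] != ARTNET_ID:
        raise ValueError("not an Art-Net packet")
    (opcode,) = struct.unpack_from("<H", packet, 8)
    if opcode != OP_DMX:
        raise ValueError("not an ArtDmx packet")
    (universe,) = struct.unpack_from("<H", packet, 14)
    (length,) = struct.unpack_from(">H", packet, 16)
    return universe, packet[18:18 + length]

def to_lantern_state(dmx, start_channel=1):
    """Map a hypothetical 5-channel fixture onto normalized lantern parameters."""
    i, r, g, b, spread = dmx[start_channel - 1:start_channel + 4]
    return {"intensity": i / 255,
            "color": (r / 255, g / 255, b / 255),
            "beam_spread": spread / 255}

packet = build_artdmx(universe=1, channels=[255, 255, 128, 0, 51])
universe, dmx = parse_artdmx(packet)
lantern = to_lantern_state(dmx)
```

Normalizing the 8-bit DMX levels to the 0 to 1 range keeps the downstream data independent of the lighting console, so the same state could drive a light on any of the supported headsets.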

To cue or trigger events inside Unity, QLab sends discrete commands that control the game state via Open Sound Control (OSC), a networking protocol popular in the performing arts and typically carried over UDP. These commands are received by an OSC converter, which translates them into socket.io messages that a cloud server distributes to all connected participants. Likewise, feedback from the Unity project travels back through the same communication channel and arrives at QLab as an OSC command.
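The OSC side of this bridge can be sketched as follows. The example encodes and decodes a basic OSC 1.0 message (integer, float, and string arguments only) and turns it into a JSON payload of the kind a socket.io bridge might emit. The address `/scene/go`, the event name `cue`, and the payload shape are assumptions made for this example, not the project's actual message scheme, and no socket.io library is used.

```python
import json
import struct
from typing import List, Tuple


def _pad(raw: bytes) -> bytes:
    """Null-terminate and pad to a multiple of four bytes, as OSC strings require."""
    return raw + b"\x00" * (4 - len(raw) % 4)


def encode_osc(address: str, *args) -> bytes:
    """Encode an OSC message with int32, float32, and string arguments."""
    tags, payload = ",", b""
    for arg in args:
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)
        elif isinstance(arg, str):
            tags += "s"
            payload += _pad(arg.encode("ascii"))
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _pad(address.encode("ascii")) + _pad(tags.encode("ascii")) + payload


def _read_string(data: bytes, offset: int) -> Tuple[str, int]:
    """Read a padded OSC string and return it with the offset of the next field."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    return text, offset + (end - offset) // 4 * 4 + 4


def decode_osc(data: bytes) -> Tuple[str, List[object]]:
    """Decode an OSC message into its address and argument list."""
    address, offset = _read_string(data, 0)
    tags, offset = _read_string(data, offset)
    if not tags.startswith(","):
        raise ValueError("missing OSC type tag string")
    args: List[object] = []
    for tag in tags[1:]:
        if tag == "i":
            args.append(struct.unpack_from(">i", data, offset)[0])
            offset += 4
        elif tag == "f":
            args.append(struct.unpack_from(">f", data, offset)[0])
            offset += 4
        elif tag == "s":
            text, offset = _read_string(data, offset)
            args.append(text)
        else:
            raise ValueError(f"unsupported OSC type tag: {tag}")
    return address, args


def osc_to_event(data: bytes) -> str:
    """Convert an OSC message to a JSON payload (hypothetical bridge format)."""
    address, args = decode_osc(data)
    return json.dumps({"event": "cue", "address": address, "args": args})


# Hypothetical usage: a "go" command for scene 7 in a room named "lobby".
message = encode_osc("/scene/go", 7, "lobby")
event = osc_to_event(message)
```

Because the same functions work in both directions, feedback from the game could be encoded with `encode_osc` and returned to the show-control machine over the same channel.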

For audio, microphones capture the actor’s voice, which is fed along with sound effects and music through a mixing console before reaching the streaming computer. The streaming computer then creates a WebRTC stream that carries the audio channels along with the RGB and depth data transmitted for 3D video. This stream is then distributed over the internet to the participants' Oculus Quests. Audio that originates in QLab is routed to the mixing console, where it follows the rest of the multimedia pipeline.

Funding to Date:

Teaching:

This project offers current and former students of the University of Iowa a diverse pedagogical and practical experience in which they contribute their creative, technological, and organizational skills to faculty-led research. This research pays current students and alumni stipends, including support through ICRU (Iowa Center for Research by Undergraduates) funding. Graduate and undergraduate students from Art and Art History, Computer Science, Theatre Arts, Dance, and Engineering have been active researchers on the project throughout.

This project provides multidisciplinary training to a diverse group of undergraduate and graduate students in theatre, art, computer science, and engineering who work with each other and the investigators through all phases of the project. They learn to communicate across disciplinary boundaries and gain insight into the intellectual and cultural foundations of the other disciplines. We are committed to encouraging the participation of a diverse group of students in this program of research. During the first two years of the project, three female undergraduate computer science students made major technical contributions to the project, one of whom was awarded an NSF Graduate Research Fellowship, and there have been four Black, Indigenous, and People of Color (BIPOC) students from the fields of 3D animation, theatre, and computer science. We expect the project will continue to be attractive to women and BIPOC students in technical disciplines, and we will recruit their participation.

Community Engagement:

If funded by the NSF, we will collaborate with a local community center, Dream City, that aims to inspire youths from diverse backgrounds through advocacy, wellness, and community connections. We have found that Virtual Reality is a powerful way to stimulate the interest of underrepresented youths in computing and STEAM. We will distribute 15 Quest 2 headsets to youths aged 5-18 through the Dream City center. We will provide training sessions on how to set up and use the Quests, invite the youths to participate in performances, and ask for feedback on their experiences. Our short-term goals are to pique their interest in computing and to learn how they respond to the performances we create. We will also demonstrate the technology to youth summer programs on campus and to high schools in highly diverse areas of Iowa.

| Amount | Source | Note | Year |
|---|---|---|---|
| $ 12,000.00 | Creative Matches Grant, Office of the Vice President for Research and Economic Development. University of Iowa | Awarded to Co-PI's for project. | 2019-2021 |
| $ 1,000.00 | Iowa Center for Research by Undergraduates (ICRU). University of Iowa | One undergraduate researcher to work on the project during the fall semester at $1,000. | Fall 2021 |
| $ 8,000.00 | Iowa Center for Research by Undergraduates (ICRU). University of Iowa | For four undergraduate researchers to work on the project during the academic year at $2,000 each. | 2020-2021 |
| $ 2,500.00 | Iowa Center for Research by Undergraduates (ICRU). University of Iowa | One undergraduate researcher to work on the project over the summer at $2,500. | Summer 2021 |
| $ 12,500.00 | Iowa Center for Research by Undergraduates (ICRU). University of Iowa | For five undergraduate researchers to work on the project over the summer at $2,500 each. | Summer 2020 |
| $ 13,000.00 | Creative Matches Grant, Office of the Vice President for Research and Economic Development. University of Iowa | Awarded to Co-PI's for project. | 2017-2018 |
| $ 3,000.00 | Iowa Center for Research by Undergraduates (ICRU). University of Iowa | Matching funds for Creative Matches Grant for undergraduate researchers to work on the project. | 2017-2018 |
| $ 52,000.00 | TOTAL TO DATE | | |

"The project represents the next generation of immersive theater, building on works such as the site-specific, interactive theatrical production Sleep No More, by Punchdrunk Theater Company in New York City, and mixed-reality performances that have premiered at film festivals around the world." Link to: Iowa Now Article

Press:

Supporting Documents:

Link to: First Creative Matches Grant Proposal

Link to: First Creative Matches Grant Award Letter

Link to: Second Creative Matches Grant Proposal

Link to: Second Creative Matches Grant Award Letter

Link to: Elevator #7 Script from Mabie Theatre Performances

Link to: Elevator #7 Script from Ohio State University Performances

Link to: OSU performance rider

Link to: Distributed Online Experience Story Outline

Link to: NSF submitted grant proposal

copyright 2021